DATAVERSITY Demo Day 2024

45:45

Three leading vendors joined forces to share the best tools and services for data governance success in this year’s DATAVERSITY Demo Day. Check out the session now to learn about powerful offerings that make it easier than ever to govern data and comply with growing regulations.

You’ll learn how to:

• Increase data value, maturity, literacy, trust and more with erwin® by Quest® solutions

• Use unrivaled data intelligence automation to support your data governance initiatives

• Deliver business value through data governance

• Use data lineage to simplify regulatory compliance

Hello and welcome. My name is Mark Horseman, data evangelist with DATAVERSITY. We would like to thank you for joining the DATAVERSITY Demo Day-- Data Governance. We're very excited to have you join us for this event set up to give you an overview of tools and services available for your enterprise data management programs.

Just a couple of points to get us started. Due to the large number of people that attend these sessions, you will be muted during the webinar. We will be collecting questions via the Q&A section. If you would like to chat with us or chat with each other, we certainly encourage you to do so.

And just to note, Zoom chat defaults to send to just the panelists, but you may absolutely switch that to network with everyone. To open the Q&A or the chat panel, you may find the icons for those features in the bottom middle of your screen. To answer the most commonly asked question, as always, we will send a follow-up email to all registrants within a couple of business days containing the links to the slides.

And yes, we are recording. And we'll also send a link to the recording of this session as well as any additional information requested throughout the webinar.

Now, let's kick off our first session of the day with erwin by Quest. And let me introduce our speaker, Nadeem Siddique. Nadeem has been with the erwin by Quest brand for over five years now. He has worked with customers globally, helping them solve their data management and data governance challenges. Nadeem provides product demonstrations, leads presales efforts, and drives active engagement and value-attainment initiatives for customers. He works on all things erwin Data Intelligence. With that, I'll hand the floor over to Nadeem. Hello, Nadeem.

Hey, Mark. Thank you for that introduction. Hello, everyone. A very good morning to everyone, and good afternoon or evening if you are joining us from a different time zone. I am Nadeem Siddique, solution strategist at Quest.

And I thank you all for joining me today to explore the topic of "Delivering Business Value through Data Governance." Mark, I hope you can see my screen and hear me well.

Yes and yes.

Perfect. As we know, data is one of the most valuable assets for any organization, and its true potential can only be realized through effective data governance. This is not just about compliance and security. It's about harnessing data to drive strategic decision-making, innovation, and growth. It's more than just applying guardrails. It's about redesigning how we guide and operate with data so that it delivers value and aligns with what the business is trying to achieve.

And by shifting the perspective from merely defending against risks to actively leveraging data, organizations can uncover new insights, optimize operations, and create significant competitive advantage. And what we've seen is that enabling data self-service can enhance data governance initiatives by empowering users and fostering a data-driven culture.

It can drive continuous improvement, reduce bottlenecks, promote compliance and security, and the list really goes on. The erwin by Quest portfolio offers broad capability and competence in an integrated platform. It's really a one-stop solution.

Now, the erwin data modeling product has been around for over 30 years. It's loved by data architects, and it's used to create conceptual, logical, and physical models. And since we're talking about governance, it helps you do governance at the architecture layer itself. Everything apart from modeling is included as part of the Data Intelligence component.

The catalog acts as a centralized repository for all the metadata coming in from different sources. It becomes a central point for you to govern your technical assets, enrich them, and increase their availability and discoverability. Currently, we are in the process of a new release for data quality, which further enhances its existing capabilities.

But its integration with the solution as a whole, and with the data catalog in particular, helps you build an understanding of the quality of data in the sources that you've already documented and cataloged. Data lineage, as you know, helps you investigate data flows and monitor the health of your pipelines, all through our built-in connectors. These connectors are owned, managed, and maintained by us.

With the data literacy component, the solution helps you curate and govern everything through workflow processes and set up stewardship to ensure accountability across the organization. And on top of it all comes the data marketplace, which becomes the entry point for self-service for business users.

Now, let's look at the seven steps to maximize the value of data with the different components that we just discussed. At erwin, we have a clear path that gives organizations the ways and means to maximize the value of data through the seven steps you're seeing here. And because we recommend a model-first approach, and a lot of our organizations are taking that approach, everything begins with data modeling.

The data can then be carefully cataloged with the help of a broad range of out-of-the-box connectors, as I mentioned earlier. It can be curated with business context and governed by applying policies and rules. And integrated data quality gives you a means to observe your data and score it.

This observing and scoring builds trust in data and makes well-governed, high-quality, trusted data available for business users to shop, share, and compare in the marketplace, the top layer that we were talking about.

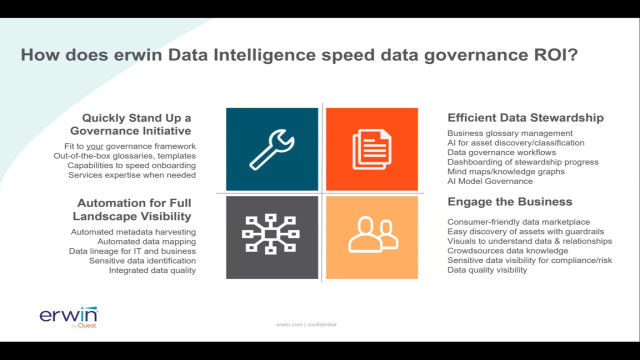

Now, how does erwin speed up data governance ROI? erwin Data Intelligence helps you implement the data governance framework that best fits your company. Assistance and knowledgeable expertise are also provided to get data governance teams going quickly without lengthy services engagements. erwin provides landscape visibility, governance capabilities, and automation so that data stewards and owners can see and understand technical data assets across the enterprise and connect those assets with business terms, policies, rules, and other business context.

erwin also lets you easily socialize high-value data, with guardrails and recommendations for use among business users, crowdsource data knowledge, and encourage engagement through self-service, which, as I said earlier, is so important.

AI governance has been the talk of the town of late. As organizations increasingly integrate artificial intelligence into their operations, AI governance has become crucial. Effective AI governance ensures that AI systems are developed and used responsibly and transparently, and it addresses critical issues such as bias and fairness.

AI has been built and explored and is now accelerating down the roller coaster, so it needs better, trusted data, and it needs it quickly. erwin provides you with the means to do that, and we'll see that as part of the demonstration today.

And before I get started with the product demonstration, I want to mention that in my previous presentation at DATAVERSITY Demo Day, I talked about accelerating the delivery of data products. As organizations move towards a data product approach, it becomes important to have a plan to deliver these data products, and to deliver them faster.

From our perspective, and with the capabilities that we have, we divide this into three major steps-- modeling for access, catalog for ownership, and data marketplace for value. With modeling, as you know, we are trying to build that blueprint, have it in place, and understand what data is included in each of these products.

The catalog piece helps us understand what data is there, where it is located, how it moves from source to target, who owns it, and who created it. And as I said earlier, the marketplace becomes the entry point for data consumers. This is exactly where we begin our demonstration.

So with that, let's get right into the solution. What you're seeing at this point is the erwin marketplace. I talked quite a bit about delivering data products and governance around all assets, products included. So with what we're seeing right now, let's talk about self-service. As we progress through the presentation, you'll also see that the different screens and capabilities bring in different personas, which shows that the solution caters to different personas.

So for someone who is trying to self-serve and understand what's available, you can use the Google-like search that's available here. The solution starts to tell you what kinds of assets are available. Are there data assets? Are there data products? What is available? The solution gives you visibility into all of that.

So you can really start to self-serve using the marketplace, drill down into any of these cards, and start to understand and analyze what's available. Let's take the example of data products, since I was talking about data products. What the solution gives me is an inventory of these data products, 16 in total.

Now, the difference between the data products you see in the erwin marketplace and data products that someone documents on their own is that this is an inventory of well-documented, well-curated, well-governed, and trusted data products. We'll explore what each of those terms means for a data product as we progress with the presentation.

So to begin with, this is, as I said, an inventory of trusted data products, and it's governed through workflow processes as well. It can be segmented by categories or domains, if you will. And what we see here are badges of gold, silver, and bronze. Just by looking at them, the consumer, the person who's trying to self-serve for data, can conclude that a gold product is probably better than one that is silver or bronze.

So, of course, the person who is self-serving will want to pick the one that seems better. But even before doing that, because quite a few products have gold status, you can quickly use the marketplace capability to compare these data products with each other. As you click the Compare button, the solution brings these data products into a side-by-side comparison view for you.

Here you can analyze and understand them in a competitive analysis, as you would any product on an e-commerce site today. In addition to seeing that these are all gold-flagged data products, you can also see data value scores. And the different components that we talked about-- the quality, the catalog, the literacy-- all combine and contribute towards these scores.

Now, the data value scores that you're seeing in the gold, silver, and bronze badges are not assigned by manually flagging products. The solution calculates them automatically. It takes the data quality scores into consideration. It takes end-user ratings into consideration. And it takes into account how complete, how enriched, and how informative each of these data products is.
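The transcript names the inputs to the data value score (data quality scores, end-user ratings, and metadata completeness) but not how they are combined. As a rough illustration only, here is a minimal sketch assuming a weighted average with made-up weights and badge cut-offs; `DataProduct`, `WEIGHTS`, and `BADGE_THRESHOLDS` are hypothetical names, not part of erwin's API.

```python
from dataclasses import dataclass

# Hypothetical weights: the transcript lists the inputs, not their weighting.
WEIGHTS = {"quality": 0.5, "rating": 0.3, "completeness": 0.2}

# Hypothetical badge cut-offs on a 0-100 scale, checked from highest to lowest.
BADGE_THRESHOLDS = [(80.0, "gold"), (60.0, "silver"), (0.0, "bronze")]


@dataclass
class DataProduct:
    name: str
    quality_score: float  # 0-100, from data quality profiling
    avg_rating: float     # 1-5 stars from end users
    filled_fields: int    # metadata fields populated (definition, steward, ...)
    total_fields: int     # metadata fields expected for this asset type


def data_value_score(p: DataProduct) -> float:
    """Combine quality, rating, and metadata completeness into a 0-100 score."""
    rating_pct = (p.avg_rating - 1) / 4 * 100  # map 1-5 stars onto 0-100
    completeness_pct = p.filled_fields / p.total_fields * 100
    return (
        WEIGHTS["quality"] * p.quality_score
        + WEIGHTS["rating"] * rating_pct
        + WEIGHTS["completeness"] * completeness_pct
    )


def badge(score: float) -> str:
    """Return the first badge whose threshold the score meets."""
    for threshold, name in BADGE_THRESHOLDS:
        if score >= threshold:
            return name
    return "bronze"  # fallback for out-of-range (negative) scores


product = DataProduct("Customer Care", quality_score=90, avg_rating=4.5,
                      filled_fields=9, total_fields=10)
print(data_value_score(product), badge(data_value_score(product)))
```

Under these assumed weights, a product with a 90 quality score, a 4.5-star average rating, and 9 of 10 metadata fields filled scores 89.25 and lands in the gold tier.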

If you look at this definition, do we get a good understanding of what this data product is? People have rated it, so there's a rating available. There's information about sensitive data indicators, so in the background, in the glossary or in the catalog, that tagging and classification is also happening. That's how this information gets built in here.

So there are stewards, and there are custodians who are working in the background. We also get visibility into who those stewards are, and who the data custodians or technical data stewards are. We get all of that information in the side-by-side comparison. We get to know the business initiative behind each data product. And thus, the consumer can conclude which product is fit for use for their purpose.

And right from the marketplace itself, they can request access to this data product, which, as we just discussed, is well-documented, governed, and trusted. We say that because we've applied governance through literacy and the catalog, and we've built trust in the data through data quality as well.

And right here on this screen, as I was saying, you can request access to these data products with the click of a button. The request goes automatically to the people who, as we saw, are responsible for these data products in one way or another, and who have the authority to grant access to others.

So what we've seen so far is that the marketplace erwin provides, from a self-service perspective, allows different users to search for any data they're interested in, compare that data, and understand it better. And once they've built trust in it, they can also request access to that data product, or to any data asset for that matter.

Now, that was the first persona I talked about: a read-only user doing self-service. From here, let's drill down a bit further into this data product and see where all of this data is really coming from. Once I click on this particular card, I see the information that I already explored in the compare screen.

But in addition to that, I see associations. And we have one of our key features, called the mind map. Using this mind map, I can get a 360-degree view of my data product, which in this case is Customer Care. After my analysis, I found it to be the best product, because it was gold-rated and had the best data value score.

On the left-hand side are all my technical associations, and on the right-hand side are all my business associations. I can explore them one by one, or I can click the Expand All button, which brings the mind map to its full capability and shows me all the associations that exist here.

So, as I was saying, now we're trying to understand this data product and all its associations in more detail. We're going beyond the data consumer who is happy to find trusted data and use it. Now we want to investigate, perhaps from a business analyst's perspective, where this data is coming from, because I want to create certain reports, and I probably also want to understand what other reports exist.

So on the left-hand side are all my technical associations. This data is part of the warehouse. The customers table is one of the sources feeding data to this particular product. I talked about the model-first approach, so we can also see that this product was modeled using this particular data model. I even have information about which model was used to create this data product.

The left-hand side, as I said, covers the technical associations: What data do I have? Where is that data? Is it sensitive in nature or not? Was it modeled or not? I'm getting all of those answers from this mind map. On the right-hand side, I have the business associations. Do I have regulations? Do I have policies in place that govern the data I am consuming or trying to consume as part of this data product?

So from a compliance and regulation perspective as well, the solution brings that information together so that you can view it all from the mind map itself. From understanding what data you have to understanding whether that data is governed and whether regulations are in place for it, the solution is automatically doing it for you.

To break down how this happens: the technical side of the house is everything that we manage in the catalog, and the business side of the house is everything that's governed and maintained through data literacy, through the business glossary components. Everything comes together and interoperates. And the marketplace allows end users to consume that information in the form of trusted, well-governed assets.
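To make the catalog/glossary split concrete, here is a minimal sketch of a mind map as a list of association records, each tagged as technical (catalog side) or business (glossary side). The record layout, relation names, and example assets are hypothetical illustrations, not how erwin stores associations.

```python
from collections import defaultdict

# Hypothetical association records: (asset, relation, related asset, side).
# "technical" links stand in for catalog metadata, "business" links for glossary content.
ASSOCIATIONS = [
    ("Customer Care", "sourced_from", "customers table", "technical"),
    ("Customer Care", "stored_in", "warehouse", "technical"),
    ("Customer Care", "modeled_by", "customer data model", "technical"),
    ("Customer Care", "governed_by", "data privacy policy", "business"),
    ("Customer Care", "subject_to", "privacy regulation", "business"),
]


def mind_map(asset, associations=ASSOCIATIONS):
    """Group an asset's direct associations into technical and business sides."""
    view = {"technical": defaultdict(list), "business": defaultdict(list)}
    for subject, relation, related, side in associations:
        if subject == asset:
            view[side][relation].append(related)
    # Convert to plain dicts so the result prints and compares cleanly.
    return {side: dict(relations) for side, relations in view.items()}


for side, relations in mind_map("Customer Care").items():
    print(side, relations)
```

Keeping both sides in one record list is a deliberate simplification: it lets a single lookup answer both "what data is this?" and "what governs it?", which is the 360-degree view described above.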

So that's how all the different components come together and deliver value to end users. From here, we can introduce a more technical persona. For that persona, we want to investigate not just the sources that are feeding data to my product; I also want to look at the lineage. As I mentioned, the solution also provides lineage through the connectors that we have.

From here, you can click on a particular asset and navigate to it. The abbreviations that we're seeing here, TA and EN, are expanded in the legend at the top. TA is a table, EN is an environment, which is really a database, and so on. All of that information is available to me here.

And each of these assets is navigable. If I'm part of the compliance team and I'm interested in a particular policy, I can click on it, bring it up, and read about it. If there is a policy document, I can bring that up and read it as well. So all of these assets are navigable.

But coming back to where I was: the customers table is one of the tables feeding data to my product, and I want to investigate lineage to see how data is coming into that table. From here I can bring up the technical properties of this table. If there is a definition, it's available. Who is the steward, or perhaps the technical data steward, for this? And these are the technical properties of the columns that are part of this table.

With the click of a button, I can bring in the lineage information. This is the horizontal technical lineage. Sometimes when I bring up the mind map, people ask me whether that's lineage. When we talk about lineage, this is what I mean: the horizontal technical lineage that helps you monitor your pipelines and understand the data flow across your landscape.

Again, with the connectors that we have, the lineage is built by us, and all the connectors are owned, managed, and maintained by us. This lineage, which in this example includes a few Tableau dashboards, Power BI reports, and SSIS as the ETL layer, can be configured for the technologies within your landscape, and a similar visualization will be available with your technologies in place.

This is where we go really deep and really technical into governing and understanding your landscape. From here, I can drill down not just to the application or database level but to the column level as well. Let me do that within the SSIS layer here.

As I do that, you see these blue T's start to appear. These blue T's are the transformation rules that have been applied. If anyone is wondering whether there is manual intervention or whether all of this is happening on its own, the answer is that it's happening through our smart data connectors. They can connect to your reports, your ETL, stored procedures, and scripts, extract transformations and data flows, and give you a similar lineage visualization with your technologies in place.

Again, in a production environment there will be a huge volume of data. If someone wants to investigate a particular field that exists as part of their dashboard, all they need to do is click on that field, and the solution automatically blurs everything else and lets them focus on that one part.
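The "click a field and blur everything else" behavior amounts to finding every column upstream and downstream of the selected one in a column-level lineage graph. Here is a minimal sketch using breadth-first search, assuming lineage is available as a list of (source column, target column) edges; the column names are hypothetical and the code does not reflect how erwin computes its view.

```python
from collections import defaultdict, deque

# Hypothetical column-level lineage edges: (source column, target column).
EDGES = [
    ("crm.customers.addr", "stage.customers.address"),          # ETL load
    ("crm.customers.name", "stage.customers.full_name"),        # ETL load
    ("stage.customers.address", "dw.dim_customer.address"),
    ("stage.customers.full_name", "dw.dim_customer.full_name"),
    ("dw.dim_customer.address", "report.customer_map.address"),  # dashboard field
]


def _reachable(start, adjacency):
    """Breadth-first search returning every node reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def focus(field, edges=EDGES):
    """Nodes to keep highlighted when a user clicks field: the field itself
    plus everything upstream and downstream of it. All others get blurred."""
    downstream, upstream = defaultdict(list), defaultdict(list)
    for src, dst in edges:
        downstream[src].append(dst)
        upstream[dst].append(src)
    return {field} | _reachable(field, upstream) | _reachable(field, downstream)


print(sorted(focus("report.customer_map.address")))
```

Clicking the dashboard's address field keeps only the address path from the CRM source through staging and the warehouse; the name columns drop out of focus.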

And if there is a discrepancy, an issue in an applied transformation, or an issue with the data movement, it's easy from here for someone like a business analyst to analyze the discrepancy, resolve it in collaboration with the ETL developers, reduce time and effort, and increase ROI, when we talk about lineage.

So with the different data connectors that we have, the solution gives you an end-to-end visualization of your data landscape. From here, we can do multiple things. I can go into data quality, and I'll do that as well. But because I talked quite a bit about AI governance, let me also showcase how erwin Data Intelligence lets you use the marketplace to see what AI models you have, and whether you're following the guardrails and regulations that need to be in place around those models.

Similar to how I looked at a data product, I can bring up the different AI models, and I can document AI models coming in from different sources. Let's take this fraud detection AI model. I can read all about it and understand it in more detail. But what I want to understand is whether I have all the guardrails around it or not.

With my fraud detection AI model, I have the technical information in addition to the business information. So I have my AI model, and it's been trained on certain data. Knowing whether the quality of that data is good or bad helps you derive better value from these models from a governance standpoint.

That information, of course, is given to you on the left-hand side, as we saw with the product. But let's explore the right-hand side of the model's mind map. Do I have guardrails around my AI model? The marketplace capability lets you look at your AI models and analyze whether the principles of the AI Bill of Rights put forward by the US White House are in place or not.

There are also quite a lot of recitals that need to be considered when we talk about the European region. Are they being considered? Also, is third-party data being used? We can give you visibility into the third-party data that's available as well. And all of this is possible, again, because the solution's interoperating components come together to give a visualization as rich as this.

So now that we've seen that this AI model has guardrails, regulations, and AI risk policies in place, and which third-party data is being used, let's talk a little bit about the quality of the data used for this particular AI model. For that, we have the integrated data quality component, which lets us look at the data sets feeding my AI models.

This integrated data quality component is quite rich in itself. It allows users to do data observability, which is proactive, and it offers a lot of augmented data quality capability. Those are the two major differentiators in the data quality we provide today, and they in turn enrich my models and the sources feeding my models, and help me understand the quality of the data.

So within data quality, the two major differentiators are that we are combining augmented data quality and data observability. What I mean by that is, if I look at this particular data set, I have suggestions coming in from the solution. I have scanned the Snowflake data source, and the solution has found fields like city, first name, gender, and Social Security number.

The solution has automatically applied the rules that should apply to each of those fields: to a Social Security number, to a first name, to a gender. It does this automatically, and that's what augmented data quality is all about as part of erwin's capability.

From here you see these accept and reject buttons. They let you, as an end user, give feedback to the solution on whether a recommended rule, such as the one applied to the city field, is accurate from your perspective. The solution learns from your feedback, takes it into consideration, and comes back with recommendations in the context of what was provided previously.
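Here is a much-simplified sketch of rule suggestion with accept/reject feedback. It assumes rules are chosen by matching column names against a small hand-written rule library, and that a rejection simply suppresses that suggestion for that column. erwin is described as learning from feedback; this sketch does not attempt any learning, and `RULE_LIBRARY`, `RuleSuggester`, and the value regexes are all hypothetical.

```python
import re

# Hypothetical rule library: column-name pattern -> (rule name, value regex).
RULE_LIBRARY = {
    r"ssn|social_security": ("valid_ssn_format", r"^\d{3}-\d{2}-\d{4}$"),
    r"first_?name": ("alphabetic_name", r"^[A-Za-z' -]+$"),
    r"gender": ("allowed_gender_code", r"^(M|F|X|U)$"),
    r"city": ("non_numeric_city", r"^[^\d]+$"),
}


class RuleSuggester:
    """Suggest value-level rules from column names and remember rejections."""

    def __init__(self):
        self.rejected = set()  # (column, rule name) pairs the user rejected

    def suggest(self, columns):
        suggestions = {}
        for col in columns:
            for name_pattern, (rule, value_regex) in RULE_LIBRARY.items():
                if re.search(name_pattern, col, re.IGNORECASE) and (col, rule) not in self.rejected:
                    suggestions[col] = (rule, value_regex)
                    break  # first matching, non-rejected rule wins
        return suggestions

    def reject(self, column, rule):
        """Record a 'reject' click so the rule is not suggested again."""
        self.rejected.add((column, rule))


suggester = RuleSuggester()
print(suggester.suggest(["CITY", "FIRST_NAME", "GENDER", "SSN", "ORDER_ID"]))
suggester.reject("CITY", "non_numeric_city")  # user clicks "reject" on the city rule
print(suggester.suggest(["CITY"]))            # the city rule is no longer suggested
```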

So this is how the solution automatically applies rules without you having to go in and apply them manually, reducing effort on your end. We often get asked about notifications and alerts. Interestingly, the data quality component proactively provides alerts to users.

What we see here is an alert about freshness, an alert about duplicates, and so on. I've been provided with five proactive alerts. After scanning, the solution is looking at the source and telling me: these are the issues we've identified, and you should have a look at them.
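Freshness and duplicate alerts like the ones shown can be approximated with two simple checks run after each scan. Here is a minimal sketch, assuming the time of the last load is known and duplicates are defined on a single key column; the function names and the 24-hour window in the usage example are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import List, Optional


def freshness_alert(last_loaded: datetime, max_age: timedelta, now: datetime) -> Optional[str]:
    """Flag a data set whose most recent load is older than max_age."""
    age = now - last_loaded
    if age > max_age:
        return f"freshness: last load was {age} ago (limit {max_age})"
    return None


def duplicate_alert(rows: List[dict], key: str) -> Optional[str]:
    """Flag values of key that occur in more than one row."""
    counts = Counter(row[key] for row in rows)
    dupes = sorted(value for value, n in counts.items() if n > 1)
    if dupes:
        return f"duplicates: {len(dupes)} repeated {key} value(s): {dupes}"
    return None


def scan(rows, key, last_loaded, max_age, now) -> List[str]:
    """Run every check after a scan and return only the alerts that fired."""
    results = [freshness_alert(last_loaded, max_age, now), duplicate_alert(rows, key)]
    return [alert for alert in results if alert is not None]


rows = [{"id": 1}, {"id": 2}, {"id": 2}]
for alert in scan(rows, "id", last_loaded=datetime(2024, 6, 1), max_age=timedelta(hours=24),
                  now=datetime(2024, 6, 2, 12)):
    print(alert)
```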

These alerts are proactive, like much of the data observability that we do as part of the solution. And from the overview level, where I was talking about the data set and the rules being applied automatically, if we drill down to the column level and start to look at the different fields, the solution first gives me distributions.

The scores are, of course, available there. But the solution also gives me distributions at each field level: what my fill percentage is and how many nulls or blanks I have. That distribution is clearly available to me here at the overview level, even without drilling down. And because we saw that rules were applied automatically, it's also classifying your fields with sensitive data indicators.

So if there is a Social Security number, or any field of that nature that should be marked as PII, the solution marks it automatically without you having to go in and do that manually. That's, again, the power of the data quality component. And I'm spending some time on the data quality component because I talked about trust, and about delivering data products and trusted data products.
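The field-level distribution (fill percentage, nulls, blanks) and the automatic PII flag can be sketched for a single column. Here is a minimal sketch, assuming values arrive as strings or `None`, that "blank" means whitespace-only, and that a column is tagged PII when at least 80% of its filled values match the US SSN format NNN-NN-NNNN. The threshold, pattern, and function name are hypothetical; real classifiers use far more signals.

```python
import re
from typing import Dict, List, Optional

# Assumed US SSN format NNN-NN-NNNN; real classifiers use many more patterns.
SSN_PATTERN = re.compile(r"^\d{3}-\d{2}-\d{4}$")


def profile_column(values: List[Optional[str]], pii_threshold: float = 0.8) -> Dict[str, object]:
    """Fill percentage, null and blank counts, and a PII flag for one column."""
    total = len(values)
    nulls = sum(v is None for v in values)
    blanks = sum(v is not None and v.strip() == "" for v in values)
    filled = [v for v in values if v is not None and v.strip() != ""]
    ssn_like = sum(bool(SSN_PATTERN.match(v)) for v in filled)
    return {
        "fill_pct": 100.0 * len(filled) / total if total else 0.0,
        "nulls": nulls,
        "blanks": blanks,
        # Tag as PII when most filled values look like SSNs (hypothetical rule).
        "pii": bool(filled) and ssn_like / len(filled) >= pii_threshold,
    }


print(profile_column(["123-45-6789", "987-65-4321", None, "  "]))
```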

Data quality plays a key role in that whole process, so it's really important to highlight these capabilities. Finally, before I wind up and move on to questions and answers: I talked about data observability. Now, moving from the data set level to the field level, the score at the data set level is 83.2%, but at the field level, my score is just 49.7%.

So there's definitely an issue at this field level. The solution gives me that distribution with respect to data quality dimensions. Across completeness, is there any issue? No, because all the fields are complete; I do not have nulls, blanks, or empty spaces. But there's definitely an issue when it comes to uniqueness. There are a lot of duplicate or repeating values within this particular field.
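The completeness and uniqueness dimensions can be illustrated with a minimal sketch. It assumes completeness is the share of non-null, non-blank values, uniqueness is the share of distinct values among those, and the overall field score is their plain average. Definitions of uniqueness vary between tools, and nothing here reproduces how erwin arrives at its 83.2% or 49.7% figures.

```python
from typing import Dict, List, Optional


def dimension_scores(values: List[Optional[str]]) -> Dict[str, float]:
    """Score one field on completeness and uniqueness, each as a percentage."""
    total = len(values)
    if total == 0:
        return {"completeness": 0.0, "uniqueness": 0.0, "overall": 0.0}
    present = [v for v in values if v is not None and v.strip() != ""]
    completeness = 100.0 * len(present) / total
    # Assumed definition: share of present values that are distinct.
    uniqueness = 100.0 * len(set(present)) / len(present) if present else 0.0
    overall = (completeness + uniqueness) / 2  # assumed equal weighting
    return {"completeness": completeness, "uniqueness": uniqueness, "overall": overall}


print(dimension_scores(["TX", "TX", "TX", "CA"]))
```

A field like `["TX", "TX", "TX", "CA"]` is 100% complete but only 50% unique, the same pattern described above: no completeness problem, but a clear uniqueness problem.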

And this is really the highlight: you or your data engineers need not go in and manually add rules to find reliable data. The solution does that for you. It has measures that are constantly looking at your data and giving you results based on those values. So you do not need to add a check for finding duplicate data; the solution already has it.

All you need to do is click the buttons that activate these measures, and they will be included as part of the scoring as well. So with augmented data quality, data observability, and the no-code automation available in the form of these measures, it provides a lot of ROI and removes a lot of the effort your data engineers would otherwise spend trying to find reliable data.

And with all of this being done by the data quality engine, that information comes back here into my Data Intelligence suite, and I can look at the data quality scores. With the click of a button, I can look at whatever scores the data quality component profiled and analyze them with reference to my AI models and my data products.

And I can conclude whether the data feeding my AI model, in this case, is good or bad. Of course, with good data going into my models, we are more likely to get better results on which to base good business decisions than the other way around.

So with that, the different capabilities of the solution really come together to provide you with different assets that are governed, that are of good quality, and that are trusted. So Mark, I think with that, I've kind of hit the mark of the presentation. And if we want to open this up to Q&A, we can definitely do that.

Yeah. We've got some fantastic questions in the Q&A and some fantastic discussion in chat, so I don't even know where to start. I did get one DM that I'd like you to address: a lot of our data sits in spreadsheets that get loaded into Snowflake. Does erwin show lineage back to these Excel spreadsheet sources?

That's a great question, and it lets me talk about a capability that is, again, one of our differentiators. Apart from the automatic lineage stitching through the smart data connectors that I was talking about, there is an integrated module called the Mapping Manager, which lets you stitch sources and targets to each other.

There's a lot of AI built into it that helps you do away with the additional effort of mapping one source to another. For example, it will map an ADDR field to an address field, so it has that kind of intelligence. So the answer is an absolute yes: you can definitely create source-to-target mappings from spreadsheets to your warehouse.

The solution will build that lineage out for you, similar to the one I showed in the example. On top of that, the Mapping Manager also acts as an inventory of all your source-to-target mappings, whether, as in this example, we are using a mapping to stitch flat files to a lake or a warehouse, or the mappings come in directly from reports and from ETL.

So if you want to look at a mapping, maybe tweak it a little, or add certain business rules on top of it, all of that is also available. Great question. Thank you for bringing that up; it lets me talk about a differentiator.
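To make the ADDR-to-address example a little more concrete, here is a minimal sketch of how abbreviation-aware column matching can work in principle. It is not erwin's Mapping Manager or its AI; the abbreviation table, the `normalize` and `suggest_mappings` helpers, and the 0.6 similarity cutoff are all hypothetical, and the fuzzy comparison uses Python's standard `difflib.get_close_matches`.

```python
import difflib

# Hypothetical abbreviation table; a real tool would curate or learn far more.
ABBREVIATIONS = {
    "addr": "address",
    "cust": "customer",
    "dob": "date_of_birth",
    "qty": "quantity",
}


def normalize(column: str) -> str:
    """Lower-case a column name and expand known abbreviations token by token."""
    tokens = column.lower().replace("-", "_").split("_")
    return "_".join(ABBREVIATIONS.get(tok, tok) for tok in tokens)


def suggest_mappings(source_cols, target_cols, cutoff=0.6):
    """Suggest one target column per source column, or None if nothing is close enough."""
    normalized_targets = {normalize(t): t for t in target_cols}
    suggestions = {}
    for src in source_cols:
        matches = difflib.get_close_matches(
            normalize(src), list(normalized_targets), n=1, cutoff=cutoff
        )
        suggestions[src] = normalized_targets[matches[0]] if matches else None
    return suggestions


# Spreadsheet headers mapped onto hypothetical warehouse columns.
print(suggest_mappings(["ADDR", "CUST_NAME", "QTY"],
                       ["address", "customer_name", "quantity", "order_id"]))
# {'ADDR': 'address', 'CUST_NAME': 'customer_name', 'QTY': 'quantity'}
```

Expanding abbreviations before the fuzzy comparison matters: a raw string similarity between "addr" and "address" is weaker than the exact match obtained after expansion, and any suggestion would still be reviewed by a person before it became a governed mapping.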

We did have one great question from chat: how much effort, and which tool sets, go into producing that valuable view of the data landscape? This came up when we were looking at the knowledge graph, the mind map.

Sure. Again, great question. Let me quickly bring the mind map up again. The heavy lift really comes on the technical side of the house: there are technical assets that need to be associated with my data product. To do that, there's an AI match module available, which pairs the glossary assets and the marketplace assets with the catalog assets and allows you to stitch the technical metadata to your business assets.

So that does away with much of the heavy lifting, and everything else, like putting in the guardrails with the glossaries and the regulations, is really easy to set up. We also have programs like "Day in the Life," where we encourage users who want to get their hands on the solution to see how easy it really is to do all of that.

So it may look like a heavy lift, but really, with the capabilities that are there and with your users being trained, it would be a cakewalk.

Awesome. What data quality dimensions are available out of the box? And what's involved to add additional or custom data quality dimensions?

Great question, again. All seven major data quality dimensions are available, and let me quickly show that to you as well. Over here, if I expand, you can see my dimensions: accuracy, completeness, uniqueness. Everything is there.

On top of that, if I want to add a new dimension or a new measure of my own, the solution is completely configurable in that sense, so you can do that. Easy configuration is available to add new sets of checks.
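As a rough illustration of what a dimension score expresses, the sketch below computes completeness (share of values that are present) and uniqueness (share of present values that are distinct) for one column, and registers a custom measure alongside them. These are common textbook definitions assumed for illustration, not necessarily how erwin computes its scores; `dimension_scores`, `MEASURES`, and the `max_length_10` check are hypothetical names.

```python
from typing import Callable, Dict, List, Optional

Value = Optional[str]


def _present(values: List[Value]) -> List[str]:
    """Values that are neither None nor blank."""
    return [v for v in values if v is not None and v.strip() != ""]


def completeness(values: List[Value]) -> float:
    """Percentage of values that are present."""
    return 100.0 * len(_present(values)) / len(values) if values else 0.0


def uniqueness(values: List[Value]) -> float:
    """Percentage of present values that are distinct."""
    present = _present(values)
    return 100.0 * len(set(present)) / len(present) if present else 0.0


# Registry of dimension checks; a custom dimension is just another entry.
MEASURES: Dict[str, Callable[[List[Value]], float]] = {
    "completeness": completeness,
    "uniqueness": uniqueness,
}


def max_length_10(values: List[Value]) -> float:
    """Hypothetical custom measure: share of present values of at most 10 characters."""
    present = _present(values)
    return 100.0 * sum(len(v) <= 10 for v in present) / len(present) if present else 0.0


MEASURES["max_length_10"] = max_length_10


def dimension_scores(values: List[Value]) -> Dict[str, float]:
    """Run every registered measure against one column of values."""
    return {name: round(measure(values), 1) for name, measure in MEASURES.items()}


emails = ["a@x.com", "b@x.com", "a@x.com", None, ""]
print(dimension_scores(emails))
# {'completeness': 60.0, 'uniqueness': 66.7, 'max_length_10': 100.0}
```

Keeping the checks in a registry is one simple way to make "add a new measure of my own" a configuration step rather than a code change to the scoring loop.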

A related question, sort of: is erwin performing data profiling to determine the data quality dimension percentages? If so, I'm guessing there will be a need to provide profiling rules to the tool as well?

That is correct. It profiles the data, looking at patterns, frequencies, and other characteristics, and the results that are populated include all of that. As I said, augmented data quality allows the solution to look at fields and apply rules automatically. To help people get started and get value quicker, we've put certain out-of-the-box terms, definitions, and profiling rules in place, which apply automatically and start giving you value.

So if you want to tweak them and make changes on top of them, you can definitely do that. But they're available out of the box, so you can leverage them and see value quickly.
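Pattern and frequency profiling, as mentioned in the answer above, can be sketched roughly as follows: each value is reduced to its shape (letters become `A`, digits become `9`), the shapes are counted, and the share of values matching the dominant shape serves as a simple automatically derived rule. This is an illustrative assumption, not erwin's profiling engine; `value_pattern`, `profile_patterns`, and `pattern_conformance` are hypothetical helpers built on the standard `collections.Counter`.

```python
from collections import Counter
from typing import List, Tuple


def value_pattern(value: str) -> str:
    """Reduce a value to its shape: letters -> 'A', digits -> '9', other characters kept."""
    return "".join(
        "A" if ch.isalpha() else "9" if ch.isdigit() else ch for ch in value
    )


def profile_patterns(values: List[str]) -> List[Tuple[str, int]]:
    """Return (pattern, count) pairs, most frequent first."""
    return Counter(value_pattern(v) for v in values).most_common()


def pattern_conformance(values: List[str]) -> float:
    """Percentage of values that match the most frequent pattern."""
    if not values:
        return 0.0
    _, count = profile_patterns(values)[0]
    return 100.0 * count / len(values)


zips = ["12345", "67890", "1234", "ABCDE", "54321"]
print(profile_patterns(zips))     # [('99999', 3), ('9999', 1), ('AAAAA', 1)]
print(pattern_conformance(zips))  # 60.0
```

A rule derived this way is only a starting point: the dominant pattern is not always the correct one, which is why being able to tweak the out-of-the-box rules matters.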

Not related, but a fantastic question: how do you handle data models and ETL tools for which you don't have a smart connector? And is that critical for end-to-end lineage?

That's a great question, again. Now, as I mentioned, we definitely recommend that all our customers take the data modeling approach. The topic of today's discussion was business value through data governance and modeling, with an emphasis on governance at the architecture level itself. But modeling is not a must for having lineage built, right? So that's one part of it.

The other part is: if we do not have a connector, what do we do? We go ahead and build it. All the different connectors I talked about today, whether for ETL, reporting tools, stored procs, or databases, are our own; we built them, and we manage and maintain them.

So we would go ahead and build one for you once we understand your requirements. But let me mention that we have 80-plus different connectors, ranging across reporting, ETL, and data sources, and you would very likely find a fit if you talk to us.

How are the lineage links between assets extracted and captured in the tool on the right side of the mind map? So they're curious about business policies, business terms, AI ethics, like the AI risk policies, those sorts of things. How is that all connected and mapped?

All the connections on the business side of the mind map that we saw?

Yeah.

That's really done through the marketplace or through the business glossary. The steps to get there are really easy, and any data steward would quickly get the hang of searching for an asset and associating it. So those associations are all done through the glossary or the marketplace.

Excellent. Well, that's all we have time for for this presentation. Thanks to the attendees who have joined us so far. We now have a 15-minute break, where we encourage you to network with each other as you hear the next speaker get set up. The next session will begin right at the top of the hour. Thank you very much, Nadeem.

Thank you, everyone. Great questions. I really enjoyed it. Thank you.
